Deploying JupyterHub on a multi-user CentOS server

I describe how I set up JupyterHub in a multi-user Linux environment.

Where I currently work, my colleagues and I do a lot of programming and data analysis. Our workflow typically involves doing the data munging and programming locally on our laptops and using the HPC cluster for large or long-running jobs.

For our local work we relied heavily on Jupyter Notebooks. This worked fine but came with the typical headaches of doing all of your work locally.

In my current job I also act as the sysadmin for a few Linux servers in our basement. As we have access to a large HPC cluster, these servers were mostly going unused. There was no problem SSH-ing into these machines, starting a Jupyter notebook server, and doing all the work there. But it was just annoying enough that nobody did it. The effort needed to log in, activate the environment, start the server, and remember to open the tunnel was just as much as running everything locally.

I wanted to set up JupyterHub so that it was as easy as visiting a URL, logging in, and getting to work. No SSH, no tunnels, no firewalls. Just click and go.

There are several documented installation methods, and I couldn't get all of them to work. With some, I got annoyed and stopped when I found myself chasing endless dependencies. So this post is mostly to document what I did, so I can remember it in the future.

System setup

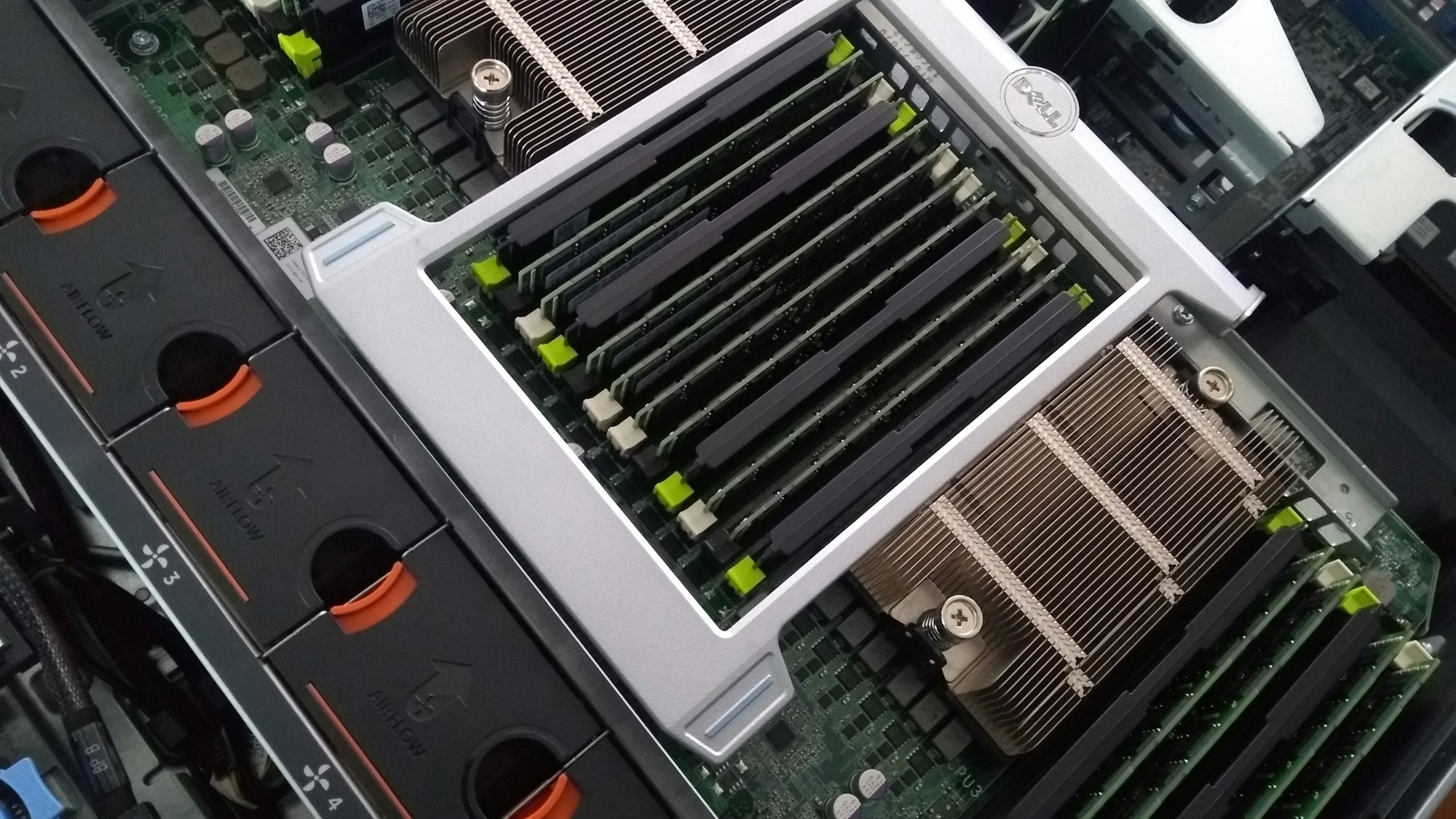

I was setting this up on a CentOS 7 machine with 96 GB of RAM, 15 TB of HDD storage, and two 6-core Intel Xeon processors.

Escaping Dependency Hell

This was a fresh CentOS 7 minimal install, so it came with virtually nothing. Not even vim. My first attempt was to just do a normal system install, starting with installing NodeJS. I immediately regretted this and gave up. While I am sure it could be done, I got tired of chasing dependency after dependency. Instead I downloaded and used Anaconda. This massively simplified everything. Dependencies were taken care of automatically, and the whole setup could be isolated to prevent users from accidentally breaking it. It was this simple:

    conda install -c conda-forge jupyterhub
    conda install notebook

And of course, do this in a dedicated conda environment (or in an isolated miniconda install).
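A quick way to confirm that the environment really contains both packages is to ask Python itself. The following is a minimal sketch, not part of my original setup: the helper name missing_packages is made up for illustration, and it assumes the script is run with the Python interpreter of the conda environment that JupyterHub was installed into.

```python
import importlib.util


def missing_packages(names):
    """Return the names that cannot be imported in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # "jupyterhub" and "notebook" are the import names of the two packages
    # installed with conda above.
    missing = missing_packages(["jupyterhub", "notebook"])
    if missing:
        print("Missing from this environment:", ", ".join(missing))
    else:
        print("jupyterhub and notebook are both importable.")
```

If either name is reported as missing, the script was most likely run with a different Python than the one in the dedicated environment.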

Setting it up as a system service

Next I made sure it worked, could get through the firewall, and was accessible from elsewhere on the network. I also followed the instructions to generate a config file (jupyterhub --generate-config) and placed it somewhere users wouldn't be able to access. We were all good. So now I needed to make it run automatically as a systemd service. For future reference, here is my jupyterhub.service file:

    [Unit]
    Description=JupyterHub Service
    After=multi-user.target

    [Service]
    Environment=PATH=/opt/miniconda/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin
    ExecStart=/opt/miniconda/bin/jupyterhub --ip 0.0.0.0 --port 8000 --config=/root/jupyterhub_config.py
    Restart=on-failure
    StandardOutput=syslog
    StandardError=syslog
    SyslogIdentifier=jupyterhub

    [Install]
    WantedBy=multi-user.target

I installed this to the correct location, enabled it, and started it. JupyterHub could now be accessed from anywhere on the company network by entering the server's URL into a web browser.
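To check from another machine that the service is actually listening, without opening a browser, a plain TCP connection test is enough. This is a rough sketch under a few assumptions: port_is_open is a hypothetical helper name, "localhost" is a placeholder for the server's real hostname, and 8000 is the port passed to --port in the unit file.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    # Placeholder host; replace with the server's name or IP address.
    host, port = "localhost", 8000
    state = "reachable" if port_is_open(host, port) else "not reachable"
    print(f"JupyterHub at {host}:{port} is {state}")
```

A "not reachable" result from a remote machine, combined with "reachable" on the server itself, usually points at the firewall rather than at JupyterHub.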

Fixing a log-in bug

Everything seemed to work at first. But once a user had logged in once, they could never log in a second time. Some weird bug in how JupyterHub opens PAM sessions on Linux seems to cause this, and this is how I fixed it. I edited the jupyterhub_config.py file and made one small change.

The file contains the following setting. It needs to be uncommented and set to False, just like this:

    ## Whether to open a new PAM session when spawners are started.
    #
    # This may trigger things like mounting shared filesystems, loading credentials,
    # etc. depending on system configuration, but it does not always work.
    #
    # If any errors are encountered when opening/closing PAM sessions, this is
    # automatically set to False.
    c.PAMAuthenticator.open_sessions = False

Setting up Logrotate

Okay, everything was working now. This last thing I did was mostly a quality-of-life improvement for myself. I wanted to isolate the logs in their own location and have logrotate manage them.

Since I sent the stdout and stderr of the systemd service to syslog, I wanted to capture that output and place it in its own log directory. I created the directory /var/log/jupyterhub/ for this purpose. Next I created the following file, /etc/rsyslog.d/jupyterhub.conf:

    if $programname == 'jupyterhub' then /var/log/jupyterhub/jupyterhub.log
    & stop

Once rsyslog has been restarted, all JupyterHub output is saved into that log file. To make sure this log doesn't get too large, I wanted logrotate to manage it. I created a file named jupyterhub in /etc/logrotate.d/ with the following contents:

    /var/log/jupyterhub/jupyterhub.log {
        rotate 10
        missingok
        notifempty
        compress
        daily
        copytruncate
    }

Now the log is rotated automatically every day, and rotated logs older than 10 days are deleted.
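The way "daily" and "rotate 10" combine into a ten-day retention window can be shown with a toy model. This is only an illustration of the retention rule, not how logrotate is implemented; the function name simulate_daily_rotation and its keep parameter are made up for the example.

```python
from collections import deque
from datetime import date, timedelta


def simulate_daily_rotation(start, days, keep=10):
    """Return the rotation dates still on disk after `days` daily rotations.

    `keep` plays the role of logrotate's `rotate` count: once more than
    `keep` rotated logs exist, the oldest one is dropped.
    """
    kept = deque(maxlen=keep)
    for offset in range(days):
        kept.append(start + timedelta(days=offset))
    return list(kept)


if __name__ == "__main__":
    remaining = simulate_daily_rotation(date(2020, 1, 1), days=30)
    print(len(remaining), "rotated logs kept")
    print("oldest:", remaining[0], "newest:", remaining[-1])
```

However long the server runs, the number of rotated logs never grows past the rotate count, so disk usage stays bounded by roughly ten days of compressed output.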

Culling idle servers

By default, once spawned, a user's notebook server stays active forever. Because users are notoriously bad at shutting down their notebooks to free up system resources, I wanted the servers to close automatically after being inactive for a certain period of time. JupyterHub provides a script called cull_idle_servers.py to do this, but you need to download it and register it as a service in the config file. This is what I added to jupyterhub_config.py:

    import sys

    c.JupyterHub.services = [
        {
            'name': 'cull-idle',
            'admin': True,
            # --timeout is given in seconds: 10800 s = 3 hours
            'command': [sys.executable, '/opt/miniconda/etc/cull_idle_servers.py', '--timeout=10800'],
        }
    ]
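The idea behind the culling service is simple: compare each user's last activity against the timeout and stop the servers that have been idle for too long. The sketch below illustrates only that decision rule. It is not the code of cull_idle_servers.py, which talks to the Hub's REST API; the function name servers_to_cull and the usernames are invented for the example.

```python
from datetime import datetime, timedelta, timezone


def servers_to_cull(last_activity, timeout_seconds, now=None):
    """Return the users whose server has been idle longer than the timeout.

    `last_activity` maps a username to the timezone-aware datetime of that
    user's most recent activity.
    """
    now = now or datetime.now(timezone.utc)
    limit = timedelta(seconds=timeout_seconds)
    return sorted(user for user, seen in last_activity.items() if now - seen > limit)


if __name__ == "__main__":
    now = datetime(2020, 1, 1, 12, 0, tzinfo=timezone.utc)
    activity = {
        "alice": now - timedelta(hours=4),    # idle past the 3-hour limit
        "bob": now - timedelta(minutes=20),   # recently active
    }
    print(servers_to_cull(activity, timeout_seconds=10800, now=now))
```

With the 10800-second timeout from the config, only servers untouched for more than three hours are selected, so anyone who steps away for lunch keeps their running kernels.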

Conclusion

Now when this server boots up, JupyterHub is launched automatically, and any user with an account on the system can visit the server's domain name or IP address. They can log into JupyterHub with their system credentials, and JupyterHub will spawn their own Jupyter Notebook server for them to use. After three hours of inactivity (the 10800-second timeout configured above), JupyterHub shuts down their notebook server and frees any RAM that was being used for variables in their notebooks.

I'm really happy with how this has been running over the past couple of months. It actually gets a lot more use from my co-workers than I initially thought it would.

Now all data and analysis can live safely in one location, on RAID-ed hard drives, and a cron job can handle a nightly backup.