A gentle introduction to parallel programming in Python

Never tried parallel programming before? Here is how to get started with it in Python

Introduction

Modern CPUs have multiple physical cores on a single chip. Four, six, and eight physical cores are now common on consumer CPUs. The current generation of Intel Xeon CPUs, which are found in enterprise-level hardware, can have up to 28 physical cores per CPU.

Additionally, if the CPU supports hyper-threading, each core can handle two tasks simultaneously. A 6-core CPU with hyper-threading can therefore be viewed as having 12 virtual cores, which can all be utilized independently.
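To check how many of these virtual (logical) cores Python can see on your machine, a quick sketch using only the standard library looks like this. The variable name `logical_cores` is just an illustrative choice.

```python
import os

# os.cpu_count() reports logical cores (hyper-threads included). It can
# return None when the count cannot be determined, so fall back to 1.
logical_cores = os.cpu_count() or 1
print(f"Logical CPU cores visible to Python: {logical_cores}")
```

On a 6-core CPU with hyper-threading enabled, this would typically report 12.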

Python, by default, will only ever utilize a single CPU core at a time. All instructions in your code are run one at a time, in serial, on one core of your CPU. This leaves all of the other cores sitting there doing nothing (well... not nothing, they are handling other stuff on your system, but not your code, and that's all we really care about).

The illustration below shows a simple example of generating 4 random strings. In serial (top), the rand_string() function is called 4 times in a row to generate 4 random strings. This could also be accomplished in parallel (bottom) by having 4 CPU cores each call rand_string() once and all at the same time. Theoretically, this would speed up computation 4X.

Note: in this simple example, computation would not be sped up at all. It would more than likely take longer to execute in parallel using Python, because launching parallel tasks carries some overhead. However, if your computational load is large enough, running it on 4 cores could bring the speedup close to 4X.
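As a rough sketch of the serial and parallel versions described above: the article only names `rand_string()`, so its body here, along with the helpers `generate_serial()` and `generate_parallel()`, are assumptions made up for illustration.

```python
import random
import string
from multiprocessing import Pool


def rand_string(length=8):
    """Return a random string of ASCII letters (illustrative implementation)."""
    return "".join(random.choice(string.ascii_letters) for _ in range(length))


def generate_serial(count, length=8):
    # One core calls rand_string() `count` times in a row.
    return [rand_string(length) for _ in range(count)]


def generate_parallel(count, length=8):
    # A pool of `count` worker processes shares the `count` calls.
    with Pool(processes=count) as pool:
        return pool.map(rand_string, [length] * count)


if __name__ == "__main__":
    print(generate_serial(4))
    print(generate_parallel(4))
```

The `if __name__ == "__main__":` guard matters here: on platforms that start child processes by re-importing your script, it stops each child from launching a pool of its own.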

Python contains several modules in the standard library that allow you to perform tasks in parallel. However, it is not quite as simple as just importing a library and saying go. You have to think a little differently about how your code operates. And of course, not all tasks can be parallelized.

At a very high level, Python allows you to handle parallelism in two different ways:

- Threads - Multithreading

- Processes - Multiprocessing

Each has its own pros, cons, and appropriate use cases.

Python Threads vs Processes

Python provides both a threading and a multiprocessing module to handle tasks simultaneously (concurrency).

From a high level, these modules appear almost identical and seem to provide identical functionality. You could use them side by side, and from the keyboard it would appear that they both performed exactly the same. However, how these modules function under the hood is extremely important to understand if you want your code to execute as efficiently as possible.

This section covers a few of the main differences between the two.

Differences in CPU usage

This part comes first because it is by far the most important. Multithreading in Python will only ever run your Python code on one CPU core at a time. You can create and execute as many threads as you want within your program, but you will never use more than one CPU core at a time.

Why is this? It is because Python multithreading is not actually executing code in parallel. It just looks and acts like it. What is actually happening is that the interpreter quickly switches between the threads. This has to happen because of Python's infamous Global Interpreter Lock (GIL). In short, the GIL allows only one thread to execute Python code at a time. However, the GIL is released during input/output (I/O) operations (important to note for later) and can be released by packages executing C code directly (e.g. numpy).
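A small experiment can make this visible. The sketch below times the same CPU-bound loop run four times in a row and then in four threads. The function names and the loop size are arbitrary choices for illustration, and the exact timings depend on your machine. On a standard CPython build you would expect the two numbers to be similar, since the threads take turns holding the GIL.

```python
import threading
import time


def count_down(n):
    # Pure-Python loop: CPU-bound, and it needs the GIL the whole time.
    while n > 0:
        n -= 1


def run_serial(n, jobs):
    # Run the loop `jobs` times, one after another, and return the elapsed time.
    start = time.perf_counter()
    for _ in range(jobs):
        count_down(n)
    return time.perf_counter() - start


def run_threaded(n, jobs):
    # Run the loop in `jobs` threads at once and return the elapsed time.
    threads = [threading.Thread(target=count_down, args=(n,)) for _ in range(jobs)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    n = 2_000_000
    print(f"serial:   {run_serial(n, 4):.2f} s")
    print(f"threaded: {run_threaded(n, 4):.2f} s")
```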

The standard implementation of Python is written in C (CPython) and was first released in 1991, before the era of modern multi-core CPUs. Additionally, the memory management used by CPython and the modules built on it is not necessarily thread-safe, so the GIL was created to ensure thread-safety in the Python language as a whole. The GIL was found to be the most performant solution at the time (and still today) for single-threaded operation, which was and remains the standard use of Python.

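But why is the GIL still around 30 years later? Python contributors have made many proposals to remove it, but for most of Python's history nobody found a way to do so without slowing down single-threaded code. Recent CPython releases (starting with 3.13) offer an optional free-threaded build without the GIL, but the default interpreter still has it.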

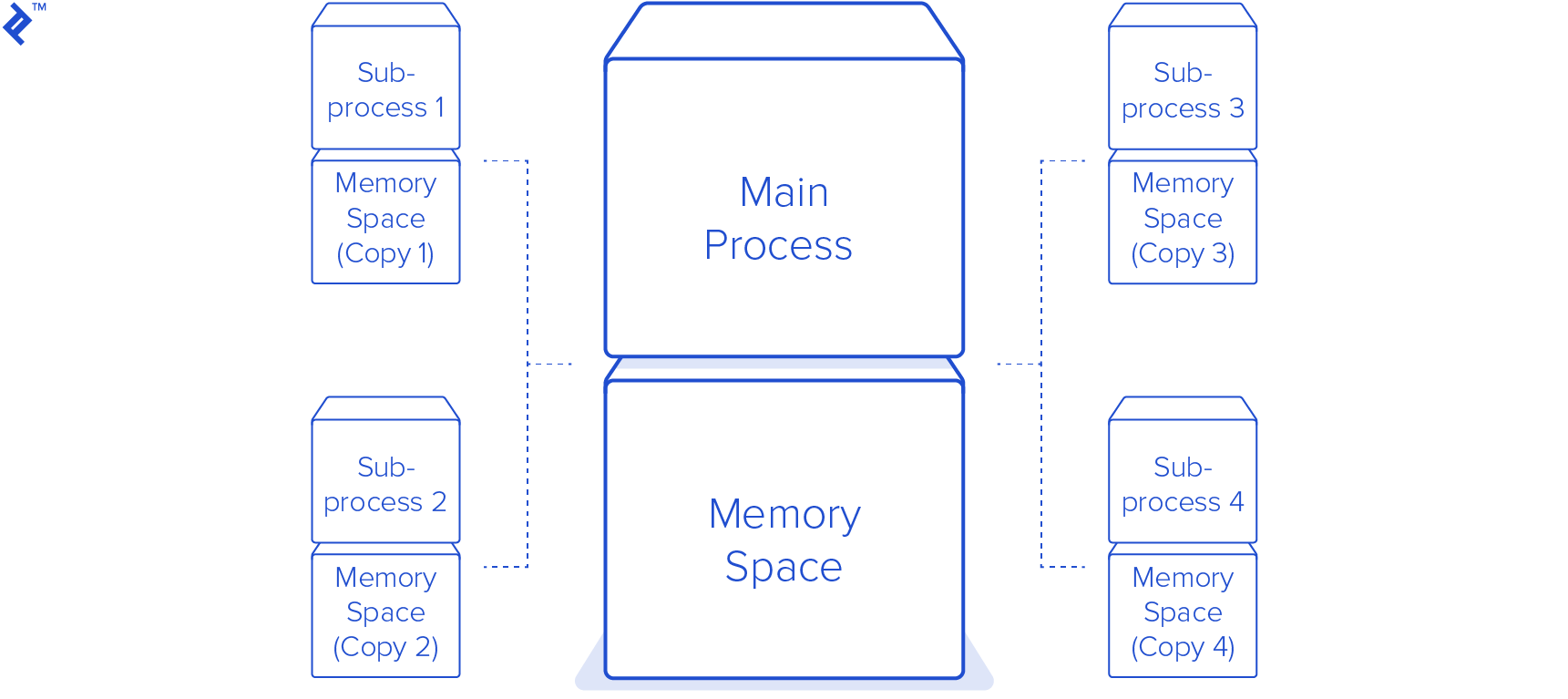

The solution for true parallel processing in Python comes from the multiprocessing library. It gets around the GIL by spawning entirely independent system processes, each with its own Python interpreter. This comes with overhead: it takes time to spawn child processes, and the memory space must be copied for each child process.

This means multiprocessing is limited not only by the number of CPU cores you have. You must also have enough memory to hold a copy of your data for each process.

Differences in memory management

This leads to the second important difference between Python threads and processes: how they utilize memory.

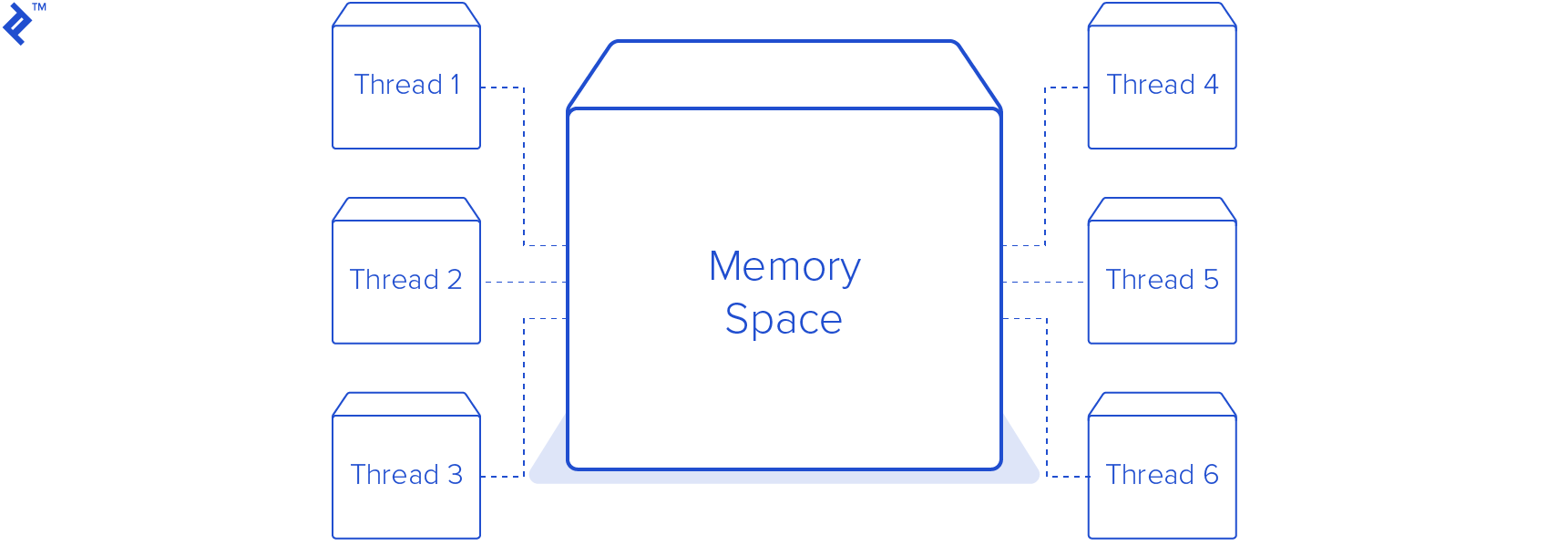

Threads all share common memory with their parent process. This is good in that they do not need to create a copy of each object for every thread, and they can easily communicate with each other through shared data. But be careful: it is very easy to create race conditions, where two threads try to access or modify the same object in memory simultaneously.
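One common way to guard shared data against this kind of race is a lock. The sketch below is a minimal illustration, not the only approach. The names `counter`, `counter_lock`, and `add_many` are made up for this example.

```python
import threading

counter = 0
counter_lock = threading.Lock()


def add_many(times):
    global counter
    for _ in range(times):
        # Without the lock, the read-modify-write below could interleave
        # with another thread and lose updates.
        with counter_lock:
            counter += 1


threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 40000, because every increment was protected by the lock
```

All four threads update the same `counter` object directly, which is exactly the shared-memory convenience (and risk) described above.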

Processes each contain a copy of the parent's memory space. This means there is no worry about one process modifying a piece of memory while another is trying to read it. But it also makes it more difficult to share data between processes.
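The separation is easy to see in a small sketch. Here a child process changes a module-level dictionary, but the parent's copy stays untouched. The names `data` and `modify` are illustrative only.

```python
from multiprocessing import Process

data = {"value": 0}


def modify():
    # Runs in the child process and changes only the child's copy.
    data["value"] = 42


if __name__ == "__main__":
    child = Process(target=modify)
    child.start()
    child.join()
    print(data["value"])  # still 0 in the parent
```

Had `modify()` been run in a thread instead, the parent would have seen 42, because threads share the parent's memory.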

However, Python does provide some tools out of the box that offer safe mechanisms for sharing data between threads and between processes. One of them is the Queue, which will be demonstrated in the examples.
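As a minimal sketch of the idea with threads, the standard library's `queue.Queue` lets several workers pull tasks and push results without any explicit locking. The `worker` function and the `None` sentinel convention are choices made for this example.

```python
import queue
import threading


def worker(tasks, results):
    while True:
        item = tasks.get()
        if item is None:  # sentinel: no more work for this worker
            break
        results.put(item * item)


tasks = queue.Queue()
results = queue.Queue()
workers = [threading.Thread(target=worker, args=(tasks, results)) for _ in range(3)]
for w in workers:
    w.start()

for n in range(10):
    tasks.put(n)
for _ in workers:
    tasks.put(None)  # one sentinel per worker
for w in workers:
    w.join()

squares = sorted(results.get() for _ in range(10))
print(squares)  # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```

For processes, `multiprocessing.Queue` offers a similar `put()`/`get()` interface, with the items pickled and sent between processes behind the scenes.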

Should I use a Thread or a Process?

The easiest way to determine which method you should use is to look at your program and decide: is my problem CPU-bound or I/O-bound? In other words, does my program spend most of its time doing computations, or does it spend most of its time waiting for I/O (reading/writing to disk, communicating over the network, etc.)?

If your program is I/O-bound, using threads will speed up the program, because the GIL is released while a thread waits on I/O.
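The sketch below simulates this with `time.sleep()` standing in for a network or disk wait (an assumption for the sake of a self-contained example; real code would make an actual I/O call). The helper names `fake_download` and `run_threaded` are made up.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(task_id, delay=0.2):
    # time.sleep() stands in for a network or disk wait. Like real blocking
    # I/O, it releases the GIL so other threads can run.
    time.sleep(delay)
    return task_id


def run_threaded(task_ids):
    # One thread per task, so all of the waits overlap.
    with ThreadPoolExecutor(max_workers=len(task_ids)) as pool:
        return list(pool.map(fake_download, task_ids))


start = time.perf_counter()
results = run_threaded([1, 2, 3, 4])
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f} s")
```

Run serially, the four simulated waits would take about 0.8 seconds in total. With threads the waits overlap, so the elapsed time should land much closer to a single 0.2 second wait.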

If your program is CPU-bound, threads will offer no increase in speed because, remember, Python is just switching back and forth between threads. Using processes, however, you can speed up CPU-bound programs dramatically.
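Here is a hedged sketch of the CPU-bound case using `concurrent.futures.ProcessPoolExecutor`, which is built on top of multiprocessing. The workload `sum_of_squares` and the input sizes are arbitrary choices for illustration, and the actual speedup depends on your core count and on how large each task is compared with the process start-up overhead.

```python
import time
from concurrent.futures import ProcessPoolExecutor


def sum_of_squares(n):
    # Pure-Python arithmetic: CPU-bound work that holds the GIL.
    return sum(i * i for i in range(n))


def run_serial(inputs):
    return [sum_of_squares(n) for n in inputs]


def run_in_processes(inputs):
    # Each worker process has its own interpreter and its own GIL.
    with ProcessPoolExecutor() as pool:
        return list(pool.map(sum_of_squares, inputs))


if __name__ == "__main__":
    inputs = [2_000_000] * 4
    for label, runner in (("serial", run_serial), ("processes", run_in_processes)):
        start = time.perf_counter()
        runner(inputs)
        print(f"{label}: {time.perf_counter() - start:.2f} s")
```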

Programming Examples

Seeing the output and the differences in run times is very helpful when experimenting with these Python modules. If you want to see some code demos, please check out my Jupyter Notebook on GitHub, which contains a few working examples: https://github.com/canthonyscott/Into_to_parallel_programming_Python